
import requests

from bs4 import BeautifulSoup

import urllib.request

url = requests.get(

https://www.ctrip.com/?allianceid=1069292 sid=1966769

)

html = url.text

soup = BeautifulSoup(html, html.parser )

girl = soup.find_all( img )

x = 0

for i in girl:

url = i.get( src )

if url==None:

pass

else:

if url[0:6]!= https: :

url= https: +url

if url[-3:]== png :

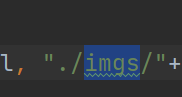

urllib.request.urlretrieve(url, ./imgs/ +str(x)+ .png )

x += 1

print( 正在下载第%d个 % x)

elif url[-3:]== jpg :

urllib.request.urlretrieve(url, ./imgs/ +str(x)+ .jpg )

x += 1

print( 正在下载第%d个 % x)

else:

urllib.request.urlretrieve(url, ./imgs/ +str(x)+ .jpg )

x += 1

print( 正在下载第%d个 % x)能不能把所有都爬下来啊

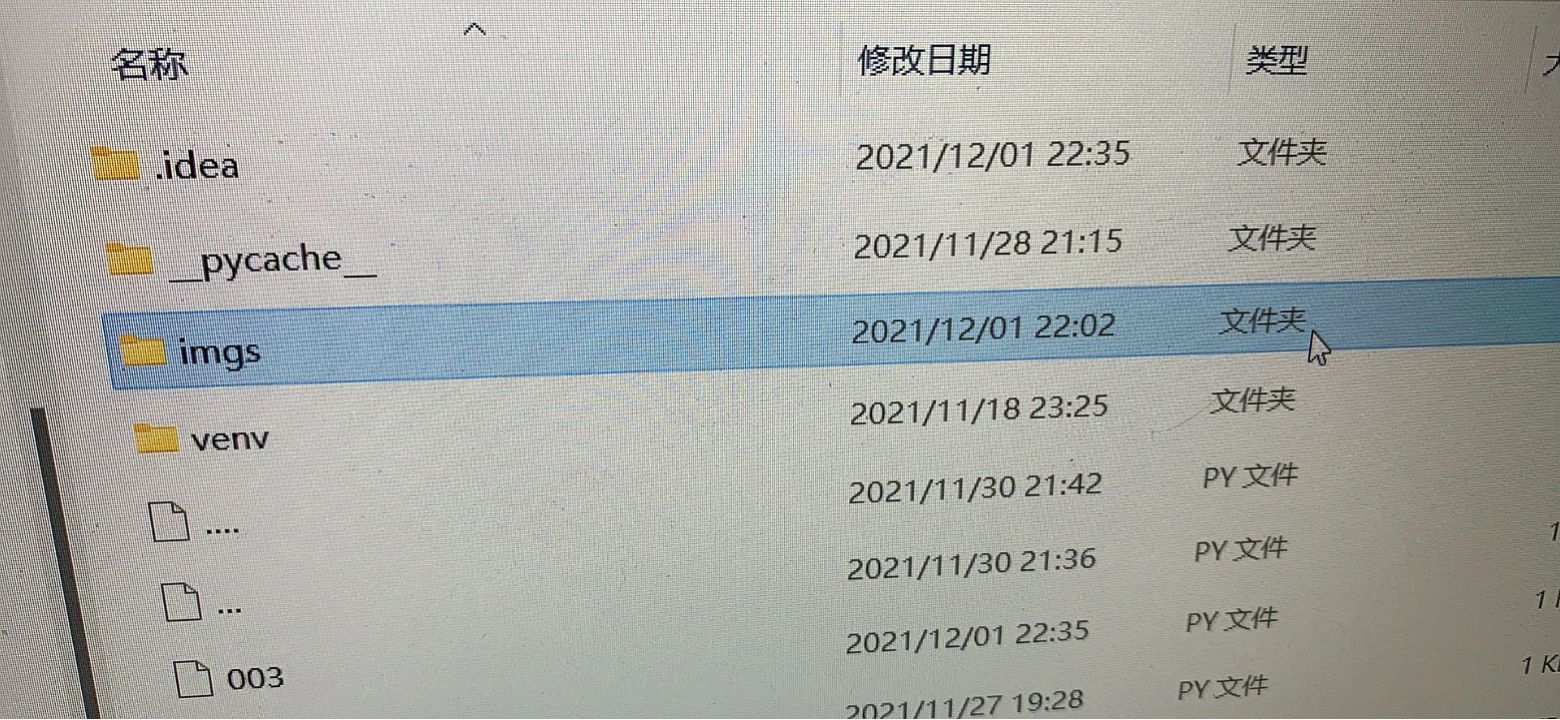

Create this directory.
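`urllib.request.urlretrieve` does not create missing folders, so `./imgs/` has to exist before the loop runs. Instead of creating it by hand, a minimal sketch of doing it at the top of the script with `os.makedirs` (the `imgs` folder comes from the script; `IMG_DIR` and `save_path` are hypothetical names used only for illustration):

```python
import os

# Output folder used by the scraper (hypothetical constant; path taken from the script)
IMG_DIR = "./imgs"

# Create the folder and any missing parents; exist_ok=True makes this a no-op
# when the folder already exists, so the script can be re-run safely.
os.makedirs(IMG_DIR, exist_ok=True)


def save_path(index: int, ext: str) -> str:
    """Return the file path for the index-th download, e.g. ./imgs/0.png on Linux/macOS."""
    return os.path.join(IMG_DIR, str(index) + ext)


print(save_path(0, ".png"))
```

In the scraper, `save_path(x, ext)` could replace the `'./imgs/' + str(x) + ext` string concatenation.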

Not just images.
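A minimal sketch, assuming "everything" means every file the page references through a `src` or `href` attribute (stylesheets, scripts, images, linked pages). It uses the standard library's `html.parser.HTMLParser` instead of BeautifulSoup so it runs without extra installs; with BeautifulSoup the same tags can be found with `soup.find_all(src=True)` and `soup.find_all(href=True)`. `LinkCollector` and `collect_links` are hypothetical names, and URLs inside CSS files or ones loaded by JavaScript are not picked up.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collect every src/href URL on a page, resolved against base_url."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.urls: list[str] = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in ("src", "href") and value:
                # urljoin handles "//host/x", "/x", "x" and absolute URLs alike
                full = urljoin(self.base_url, value)
                # Skip javascript:, mailto:, data: and other non-HTTP links; drop duplicates
                if urlparse(full).scheme in ("http", "https") and full not in self.urls:
                    self.urls.append(full)


def collect_links(html: str, base_url: str) -> list[str]:
    parser = LinkCollector(base_url)
    parser.feed(html)
    parser.close()
    return parser.urls


sample = """
<link rel="stylesheet" href="/css/main.css">
<script src="//cdn.example.com/app.js"></script>
<img src="logo.png"><a href="https://example.com/about">About</a>
<a href="javascript:void(0)">noop</a>
"""
for u in collect_links(sample, "https://www.example.com/index.html"):
    print(u)
```

Each collected URL can then be passed to `urllib.request.urlretrieve`, the same way the image URLs are in the script.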

Like this?

Yes.

OK, thanks.